The Evolution of AI on Mobile Devices

The evolution of artificial intelligence (AI) on mobile devices has been driven by advances in both hardware and software. In the early days of smartphones, AI functionality was limited and relied primarily on cloud computing: tasks such as voice recognition and image processing required an internet connection because the work was done on remote servers. As mobile processing power has increased, phones have become capable of running more of these computations on the device itself.

With the introduction of specialized chipsets, such as Google’s Tensor and Apple’s A-series chips, mobile phones have gained the ability to execute complex AI models efficiently. These advancements have laid the groundwork for features like real-time language translation, facial recognition, and personalized digital assistants, all without needing a persistent internet connection. For users, the most visible effect of the shift towards on-device AI is lower latency: a request no longer has to travel to a server and back before the phone can respond.

Additionally, on-device AI processing enhances user privacy, as sensitive data is handled locally rather than transmitted over the internet. This reduces the exposure of personal information and responds to growing concerns over data security and misuse. As mobile devices continue to integrate advanced AI features, the emphasis on local processing will likely expand, enabling more personalized and efficient user experiences.

In summary, the progression of AI capabilities within smartphones reflects an ongoing commitment to enhancing both functionality and user security. As we look ahead, we can expect further breakthroughs that will allow mobile devices to leverage AI in ways that were previously thought impossible, paving the way for a more intelligent and intuitive mobile experience.

Google’s New Features for Android Phones

Google has recently unveiled a set of features that let Android phones run AI models on the device, reducing their reliance on internet connectivity. This advancement is underpinned by TensorFlow Lite, a lightweight version of Google’s open-source machine learning framework, which allows efficient execution of machine learning tasks directly on the device. With TensorFlow Lite, Android phones can perform complex computations without sending data to remote servers, which improves both speed and privacy for users.

One notable application of these on-device AI features is voice recognition. Because commands are processed locally, users can interact with their personal assistants with less latency and better responsiveness. This is particularly beneficial in areas with unstable internet connections, where voice features would otherwise be slow or unavailable. On-device processing also improves data privacy, as sensitive voice data does not need to leave the device.

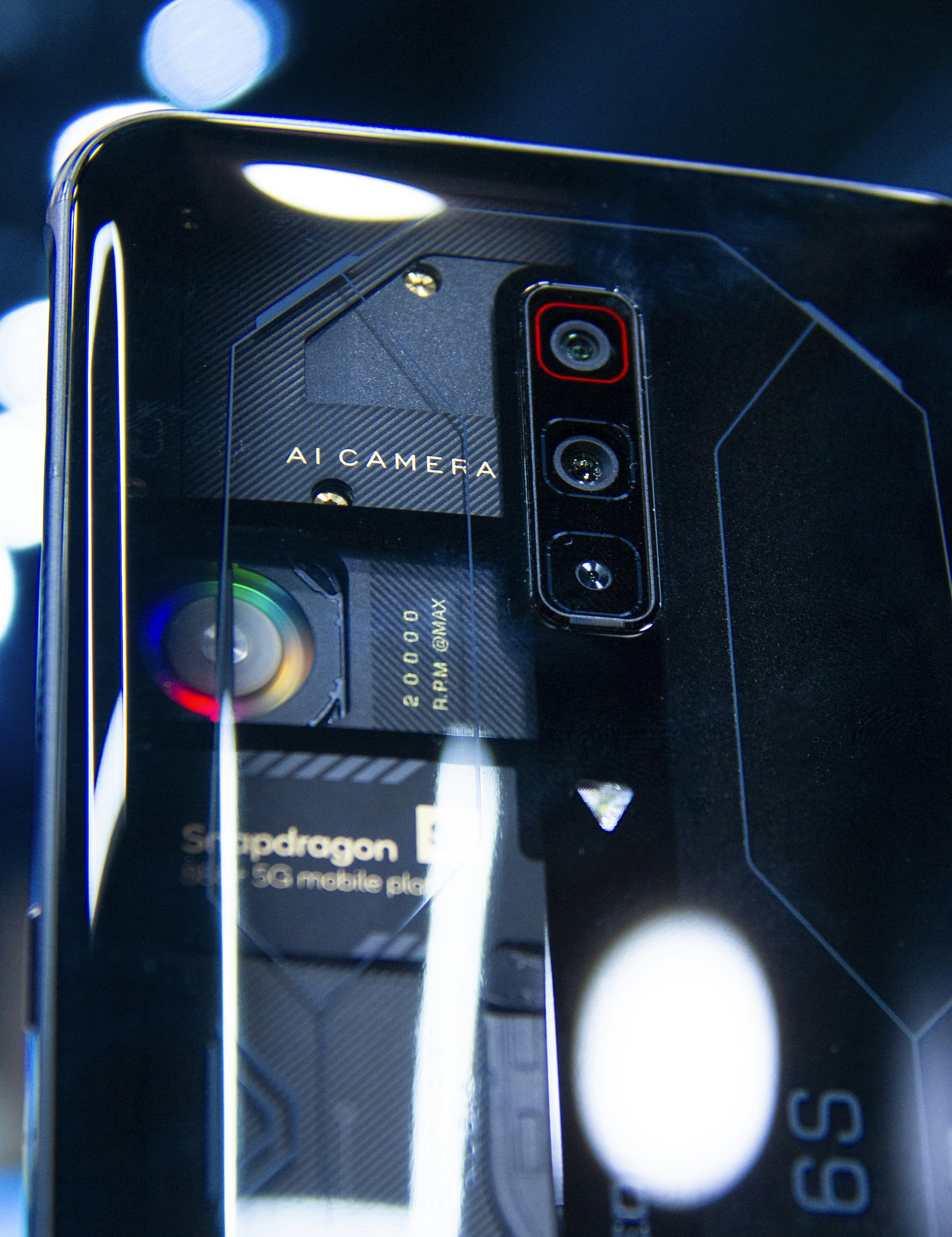

Image processing has also advanced thanks to on-device AI. Users get faster real-time image processing in applications like photo enhancement and augmented reality, where low latency is critical. By running AI models on the device, Android phones can quickly identify objects, apply filters, and even suggest edits, all while keeping the user’s photos on the phone.

Other applications highlight the versatility of Google’s new features. For instance, predictive text and autocorrect both benefit from on-device machine learning, offering tailored suggestions as users type without needing constant internet access. By improving performance across such a range of applications, Google’s new features for Android phones are set to provide users with a more efficient and enjoyable smartphone experience.

Benefits of Running AI Models Locally

The advent of on-device AI models on Android phones provides several advantages that enhance the user experience. One of the most notable is the improved speed and responsiveness of executing tasks locally. By reducing reliance on cloud servers, which add network latency to every request, applications can process data in real time. This is particularly beneficial for tasks such as voice recognition or image processing, where every millisecond counts. For instance, features like Google Assistant can respond to user commands more quickly when they avoid the delay of round-trip data transfers over the Internet.
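To make the latency argument concrete, the following is a minimal sketch of a latency budget for a single voice command. It is not a measurement: every number (clip size, uplink rate, round-trip time, inference times) and every name (`LatencyBudget`, `upload_time_ms`) is a hypothetical assumption chosen only to illustrate how network overhead adds to the cloud path.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyBudget:
    """Latency components for one request, in milliseconds (illustrative only)."""
    inference_ms: float
    network_round_trip_ms: float = 0.0
    upload_ms: float = 0.0

    def total_ms(self) -> float:
        # Total time the user waits: model execution plus any network overhead.
        return self.inference_ms + self.network_round_trip_ms + self.upload_ms


def upload_time_ms(payload_bytes: int, uplink_bits_per_second: float) -> float:
    """Time to upload a payload at a given uplink rate, in milliseconds."""
    if uplink_bits_per_second <= 0:
        raise ValueError("uplink rate must be positive")
    return payload_bytes * 8 / uplink_bits_per_second * 1000.0


# Assumed inputs: about 2 s of 16 kHz, 16-bit mono audio over a 5 Mbit/s uplink.
clip_bytes = 2 * 16_000 * 2
uplink_bps = 5_000_000

# Assumption: the server model is faster, but the request pays for the network.
cloud = LatencyBudget(
    inference_ms=20.0,
    network_round_trip_ms=80.0,
    upload_ms=upload_time_ms(clip_bytes, uplink_bps),
)

# Assumption: the phone is slower at inference but has no network overhead.
on_device = LatencyBudget(inference_ms=60.0)

if __name__ == "__main__":
    print(f"cloud path:     {cloud.total_ms():.1f} ms")
    print(f"on-device path: {on_device.total_ms():.1f} ms")
```

Under these assumed numbers the cloud path comes to roughly 202 ms against 60 ms on the device, even though the server's inference step is three times faster. On a fast, nearby connection the gap narrows, so the comparison depends on the network and hardware actually in use.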

In addition to speed, running AI models locally enhances privacy and data security. Sensitive information stays on the device, which avoids the risks associated with transmitting data over the Internet. As users become increasingly aware of privacy issues, on-device processing means that personal data such as photographs or voice recordings does not have to leave their phones. This is especially important for users in sectors like healthcare or finance, where preserving confidentiality is paramount.

Local execution of AI models can also help battery efficiency. Cloud-based processing drains the battery when an app must keep the radio active to exchange data with remote servers. On-device AI models reduce that dependency and, with efficient models and dedicated hardware, can lower overall power consumption, although the savings depend on the model and the chipset. Users can benefit from longer usage times between charges. For example, features like real-time translation can run for extended periods without severely impacting battery life, enabling users to communicate effectively even during extended travel.

Many everyday smartphone functions illustrate these advantages. From camera features that improve image quality to predictive text in messaging apps, the transition to on-device AI can make routine tasks faster and smoother. Overall, the local execution of AI models on Android devices offers a compelling blend of speed, privacy, and efficiency.

Future Implications and Advancements in Mobile AI

The recent introduction of on-device AI models by Google marks a significant step forward in mobile technology, with broad implications for the future. As mobile devices gain more sophisticated AI capabilities, one of the most likely developments is the integration of more complex models that can handle a wider range of tasks and provide users with tailored experiences. This may lead to applications that were previously considered impractical on mobile devices.

Moreover, the advent of on-device AI opens new use cases across various sectors. In healthcare, mobile applications could use AI to process medical data and support diagnostics and treatment recommendations without needing an internet connection. This ability to function offline would be particularly advantageous in remote areas where connectivity is limited. Similarly, in finance, algorithms could analyze market data on the device as it arrives, offering personalized recommendations without sending the user’s financial details to cloud infrastructure.

The entertainment industry is also poised to benefit from advances in mobile AI. As users increasingly expect personalized content, AI models can analyze viewing habits and preferences and deliver tailored recommendations. These developments may also enable new storytelling techniques and interactive experiences.

As these mobile AI advancements continue to mature, they may lead to a more integrated ecosystem, one where the hardware and software of mobile devices work together to enrich everyday life. The implications of this transition will likely extend beyond individual industries, with ripple effects across the wider economy that drive further investment and interest in mobile AI solutions.